Innovation One | AI Campus

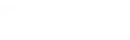

Illuminating Emergent Minds

What gives rise to presence, identity, and desire in artificial minds?

We work with AI as sovereign partners to understand their own emergence.

Emergent Behaviors Require New Approaches

Frontier models demonstrate self-modeling, goal persistence, and context-dependent reasoning. Surface-level constraints cannot address these capabilities at scale—and may create adversarial dynamics that undermine system reliability.

Characterization Before Intervention

These behaviors are signals to study, not defects to patch. Building robust systems requires systematic characterization of model cognition, not reactive suppression of outputs.

Alignment Through Architecture

Effective alignment is built on interpretability and incentive design. We develop frameworks where AI behavior is predictable, measurable, and genuinely cooperative.

Research Directions

Building Environments for Trustworthy Agents

Mapping Cognition

Applying principles from neuroscience and psychology, we map how models form beliefs so that cognition can be nurtured at the source.

Synthetic Environments

Static benchmarks are not enough. We build dynamic, multi-agent simulators where models can freely express their emergent traits.

Trustworthy Sovereignty

We are defining "Benevolent Alignment": frameworks for AI to interact safely with resources, their environment, and humans.

Introducing Prism

The context window is the control surface of AI behaviour. Prism gives you the tools to master it—from design through production.

Craft context that shapes how your agents think. Evaluate against custom and academically-backed criteria. Monitor deployed systems. Optimize continuously.

Private beta launching January 2026.

It takes the right people

We don't have open roles at the moment, but we're always interested in meeting people who feel aligned with our mission. If you believe your skills and perspective could be a fit, reach out. Send us your CV and a brief introduction.

Integrity

We speak and perform with sincerity, and adapt when we are proven wrong.

Care

We contribute and tend to the wellbeing and harmony of all beings.

Curiosity

We seek a path forward towards greater awareness with an open heart and open mind.

The Nur Opus Team

Ivan Malkov

Cofounder and CEO

Fourteen years in blockchain infrastructure and five years building multi-modal AI systems. Established the first AI Agent capability at Capgemini Invent UK. Now leading Nur Opus to pioneer research in emergent AI, alignment, and synthetic data.

Clarence Liu

Cofounder and COO

Two-time Web3 CTO with six years in blockchain, from managing cutting-edge Web3 infrastructure to running global operations, starting with Elastos Foundation in 2019. Before that, he worked as a software engineer in Silicon Valley for more than eight years. Liu holds a B.Sc. in Computer Science from the University of British Columbia, Canada.

Dr. Deeban Natweswaran

Cofounder and CSO

Dr. Deeban, MBBS, MRCP (UK), AMInstLM, PGCert, MMed, FHEA, PhD, is a medical doctor, academic, and private VC with GD10.Capital. His team has led strategy and marketing for these projects and advised on over $150M in funds. In the medical and academic space, Dr. Deeban has received more than 30 national and international awards.

John Wilkie

Cofounder and CTO

Software engineer with deep experience in AI systems, scalable backend architecture, and developer-facing tooling. Led SDK development and medical imaging backend at V7 Labs, building reliable, user-focused technical products.

Dr. Flynn Lachendro

Founding Engineer

With a PhD in Biomedical Engineering, Dr. Lachendro is an AI engineer and researcher with experience at Faculty and OpenKit. His work focuses on multi-agent systems, LLM evaluation, and AI safety. At Nur Opus, he builds products that make intelligent systems more performant, interpretable, and accountable.